ECREA 2022

“Coordinated Inauthentic Behavior”: How Meta Shapes the Discussion of Mis/Disinformation on Facebook

Edward Hurcombe, Daniel Angus, Axel Bruns, Ehsan Dehghan, and Laura Vodden

- 24 Oct. 2022 – Paper presented by Dan Angus at the ECREA 2022 post-conference ‘Digital Media and Information Disorders’, Aarhus

Abstract

Introduction

This paper examines Meta’s public communication on problematic online content via the ‘Meta Newsroom’. Through content analysis, we seek to understand how Meta frames the issue of problematic content on the Facebook platform, and which specific interventions it reports. This analysis takes place against the backdrop of a larger study of problematic online content on the Facebook platform, which will be presented at ECREA. This paper complements that study by seeking to understand Meta’s own platform policy development and its approaches to curbing the dissemination of problematic information.

The data for this study was collected by web-scraping posts on the topic of content moderation published in the Meta Newsroom between 2016 and 2021. These posts were identified through a manual content analysis, which classified posts into pre-determined themes, e.g. posts reporting on platform-based issues to do with ‘bias’, ‘content quality’, ‘inciting violence’, and so on. A timeline of key externalities was also developed for comparative purposes.

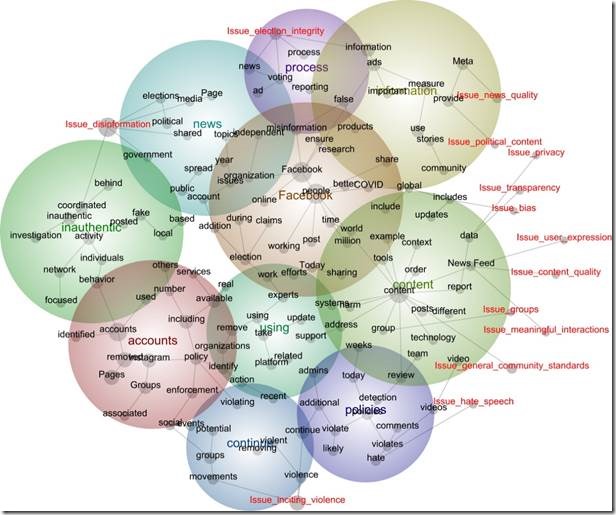

We used the proprietary topic modelling tool Leximancer (Smith, 2003; Smith & Humphreys, 2006) to identify the emergent concepts within the posts (see Figure 1). Preliminary findings suggest the prominence, for example, of concepts such as “technology” and “hate” in Newsroom posts discussing “community standards”, and of “ads” and “election” in posts on the topic of “transparency”. In posts discussing issues to do with “disinformation”, the keywords “inauthentic”, “coordinated”, and “behavior” stand out. These keywords appear to indicate, for instance, the visibility of “hate” in questions of what is and is not acceptable to Meta’s “community”, and the prominence of political advertising during elections in questions of “transparency” on Meta’s platforms. Lastly, posts within the “disinformation” category appear to demonstrate the terminology favored by Meta. We are continuing to develop a temporal analysis from these preliminary findings.

This work is ongoing, and we are yet to fully examine the temporal development of topics over the 5-year timeframe. In the lead-up to ECREA we will segment the collected data into specific epochs (four quarters per year over 5 years) and evaluate the evolution of content moderation activities by Facebook, as communicated via the Newsroom.
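The quarterly segmentation described above can be illustrated with a minimal sketch. It is not the authors' pipeline: the record layout (a `published` date and a `theme` code per post) and the function names are assumptions made purely for illustration, and the sample posts are invented placeholders rather than study data.

```python
from collections import defaultdict
from datetime import date


def quarter_label(d: date) -> str:
    """Return a sortable epoch label such as '2018-Q1' for a given date."""
    quarter = (d.month - 1) // 3 + 1
    return f"{d.year}-Q{quarter}"


def segment_by_quarter(posts):
    """Group post records into quarterly epochs, ordered chronologically.

    Each record is assumed (hypothetically) to be a dict with a
    'published' date; other fields are carried along untouched.
    """
    buckets = defaultdict(list)
    for post in posts:
        buckets[quarter_label(post["published"])].append(post)
    # Labels of the form YYYY-Qn sort chronologically as plain strings.
    return dict(sorted(buckets.items()))


# Invented placeholder records, not data from the study.
sample_posts = [
    {"published": date(2020, 11, 5), "theme": "disinformation"},
    {"published": date(2018, 2, 14), "theme": "transparency"},
    {"published": date(2018, 3, 1), "theme": "disinformation"},
]

epochs = segment_by_quarter(sample_posts)
for label, items in epochs.items():
    print(label, len(items))
```

Grouping on a string label keeps each epoch easy to pass to a separate analysis run; quarters with no posts are simply absent from the result and would need to be filled in explicitly if an unbroken timeline is required.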

Figure 1: Leximancer concept map. Coloured theme circles group together highly connected concepts (indicated in black text). Colour indicates conceptual prominence, running from red (most prominent) through yellow, green, and blue to violet (least prominent). Red tags indicate the affinity between the emergent concepts from the text posts and the manual codes applied to these posts.

Acknowledgements

Data for this study is provided by CrowdTangle, a public insights tool owned and operated by Meta.

References

Smith, A. E. (2003). Automatic Extraction of Semantic Networks from Text using Leximancer. Proceedings of HLT-NAACL, 23-24.

Smith, A. E., & Humphreys, M. S. (2006). Evaluation of unsupervised semantic mapping of natural language with Leximancer concept mapping. Behavior Research Methods, 38(2), 262-279.